This article should have been about sampling profilers and how they may skew results.

I wanted to write code to have a thread do a bit of work (well under a millisecond) and then sleep, so that each work+sleep iteration would last around 0.5-1ms. This meant 1000-2000 iterations per second.

But to do that, I had to first find a primitive that would allow such precision.

And that’s how I ended up following Alice into the rabbit-hole of “high resolution” timers.

My setup

Before anything, let me state that my setup is the following:

- OS: Windows 10 22H2 build 19045.5965

- CPU: AMD Ryzen 7 7745HX (8 cores, 16 threads, SMT on)

This was also tested on another machine with the following specs and gave similar results:

- OS: Windows 11 24H2 build 26100.4349

- CPU: AMD Ryzen 7 7435HS (8 cores, 16 threads, SMT on)

How to sleep with high resolution on Windows

Sleep

Windows provides many primitives to make a thread sleep. The most obvious one is calling Sleep.

But it takes its argument in milliseconds, which means it cannot sleep for less than a millisecond and would not work for my use case. And it is actually even worse than this: even though it accepts inputs such as 2ms (Sleep(2)), it is limited by the OS timer resolution, which by default is around 15.6ms.

This is well documented in the remarks of the documentation:

The system clock “ticks” at a constant rate. If dwMilliseconds is less than the resolution of the system clock, the thread may sleep for less than the specified length of time. If dwMilliseconds is greater than one tick but less than two, the wait can be anywhere between one and two ticks, and so on. To increase the accuracy of the sleep interval, call the timeGetDevCaps function to determine the supported minimum timer resolution and the timeBeginPeriod function to set the timer resolution to its minimum. Use caution when calling timeBeginPeriod, as frequent calls can significantly affect the system clock, system power usage, and the scheduler.

As mentioned, we could try to use timeBeginPeriod to (supposedly) obtain a 1ms timer resolution. I say supposedly because Bruce Dawson has blogged for years about why you should avoid using it. It's also now documented that the kernel is free to ignore the request if the application does not play audio or its window is not visible:

Starting with Windows 11, if a window-owning process becomes fully occluded, minimized, or otherwise invisible or inaudible to the end user, Windows does not guarantee a higher resolution than the default system resolution.
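The tick rule from the Sleep remarks quoted above can be written down as a tiny model. It illustrates the documented rule, not the kernel's implementation. The function name is hypothetical, and 15.625ms is the usual default tick (the "around 15.6ms" mentioned earlier).

```cpp
#include <cassert>
#include <cmath>

// Simplified model of the documented Sleep behavior (not the kernel's actual code):
// the wait is serviced on whole ticks of `tickMs`, so a request of `requestedMs`
// ends somewhere between floor(requested / tick) and ceil(requested / tick) ticks.
struct SleepBounds {
    double minMs;
    double maxMs;
};

inline SleepBounds sleepBoundsMs(double requestedMs, double tickMs) {
    assert(tickMs > 0.0 && requestedMs >= 0.0);
    const double ticks = requestedMs / tickMs;
    return { std::floor(ticks) * tickMs, std::ceil(ticks) * tickMs };
}
```

For example, sleepBoundsMs(2.0, 15.625) gives {0, 15.625}: a Sleep(2) can return almost immediately or after a full tick. Likewise, sleepBoundsMs(20.0, 15.625) gives {15.625, 31.25}.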

Wait* functions

WaitForSingleObject and its cousins (such as WaitForMultipleObjects) allow the user to wait for an object to be signaled. Usually you would provide a handle to some kind of I/O operation or synchronization object.

While its timeout argument suffers from the exact same issues as Sleep, if you can obtain an object that is signaled at precise times, you could in theory get a higher resolution.

There are a few sources of events one could think of:

- DXGI swap chain events: SetMaximumFrameLatency

- WASAPI audio client events: IAudioClient::SetEventHandle

- Waitable timers: CreateWaitableTimerExW

- Some custom driver event

Since the first two options are related to video/audio, and the last one would be too complex to set up widely, let's use the waitable timers.

Waitable timers were initially also tied to the system clock ticks, but since Windows 10 version 1803 we have the CREATE_WAITABLE_TIMER_HIGH_RESOLUTION flag (documented since 2022).

Sounds great? Let’s test it!

Testing our sleep function

We want to run some of our code at a fixed frequency, let’s say 1000Hz (every 1ms). Error handling code is stripped on purpose.

First, let's create our timer and ask it to fire with a period of 1ms.

    #include <windows.h>

    // Create a high resolution timer
    HANDLE hTimer = CreateWaitableTimerEx(nullptr, nullptr, CREATE_WAITABLE_TIMER_HIGH_RESOLUTION, TIMER_ALL_ACCESS);

    // Then configure it
    LARGE_INTEGER dueTime;
    dueTime.QuadPart = 0; // Start timer immediately
    SetWaitableTimer(hTimer, &dueTime, 1 /*every 1ms*/, nullptr, nullptr, FALSE);

Then simply loop over and over, waiting for the event and doing a bit of CPU work (~90μs on my machine). We'll also instrument the code using Tracy for analysis, but you could use any instrumentation profiler for this.

void AccurateSleep()

{

ZoneScoped(); // Tracy profiler marker to measure the duration of WaitForSingleObject

WaitForSingleObject(hTimer, INFINITE); // Wait for the next timer event to be signaled

}

// ...

while(true)

{

ZoneScopedN("Iteration");

DoSomeWork(); // Unoptimized loop doing multiply-adds on integers

AccurateSleep();

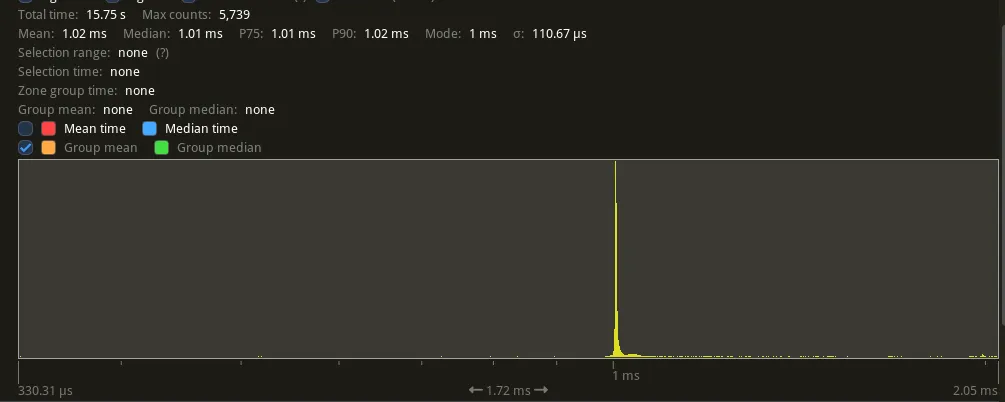

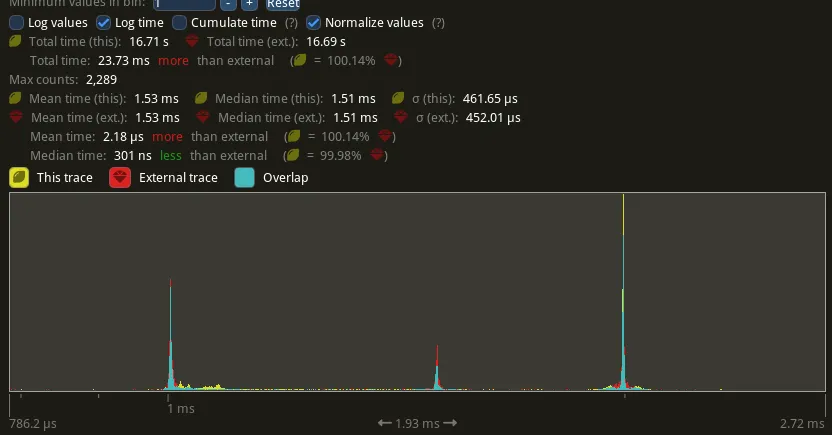

}Let’s see if we got it right by plotting the histogram of the Iteration zones:

Awesome, right?

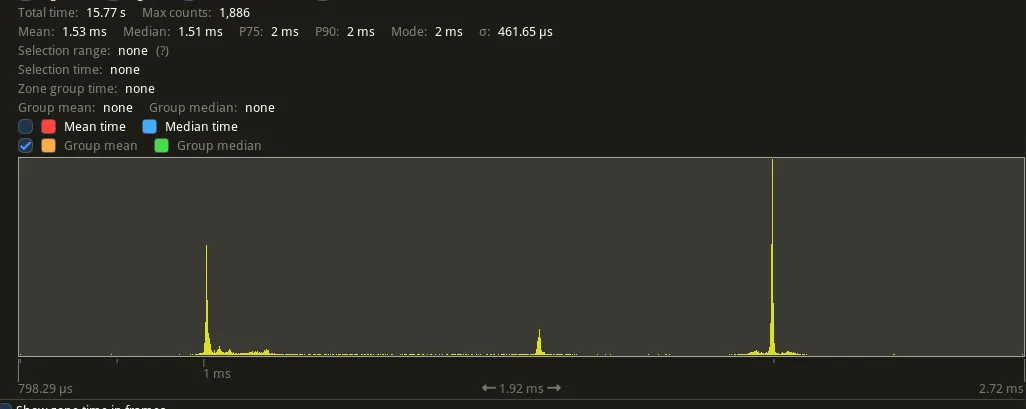

But a good software performance analyst always double-checks their results before publishing, so let's repeat the test:

There are now spikes at 1.5 and 2ms. There are actually more of those than the ones at 1ms… What the hell happened here?

The timeBeginPeriod(1) issue all over again

Discord running in the background is what happened!

⚠ In general, you should avoid running other programs in the background when doing performance analysis, but in some cases you actually want to observe your program in its expected environment. For PC games, that typically means Discord and a web browser running in the background.

While changes were made in 2020 (see Windows Timer Resolution: The Great Rule Change) so that Sleep is no longer impacted as much by other processes calling timeBeginPeriod(), it still has an impact here when using waitable timers.

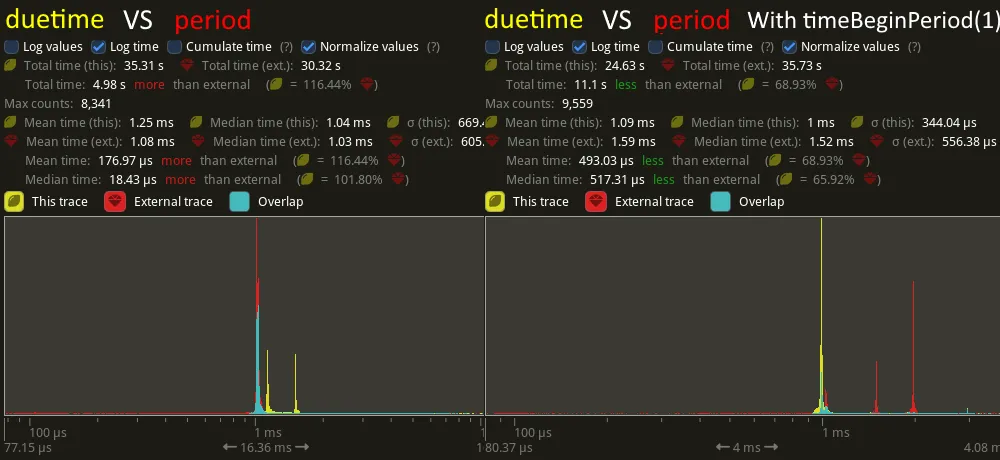

To test the theory, I ran my test on both Windows 10 and 11, with and without timeBeginPeriod(1).

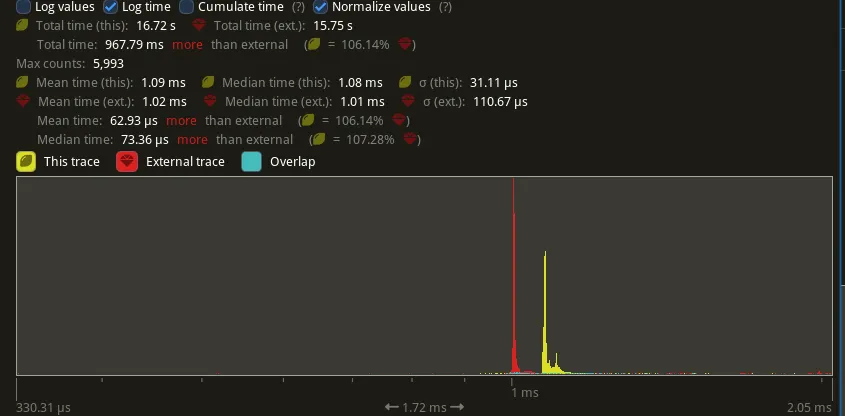

Here is the comparison of the test running with Discord in the background vs the process calling timeBeginPeriod(1) at the beginning:

Damn, we’re back to having something non-deterministic again.

Not only that, but having any program changing Windows timers will greatly affect our precision!

However, we did gain a new insight: the minimum granularity of the timers seems to be 0.5ms (on my system). This can be confirmed by calling NtQueryTimerResolution.

You can use powercfg /energy /duration 5 to scan the system and detect whether some programs are changing the kernel timer resolution. Note that using high resolution timers, even without timeBeginPeriod, will get your program listed in the report with a period of 1ms.

Trying to be smarter than the kernel

OK, so using SetWaitableTimer's lPeriod parameter does not seem to give good results. But you can find examples of waitable timers being used with the lpDueTime parameter instead of the period one!

Let’s give it a try, our code now looks like this:

void AccurateSleep(int useconds)

{

ZoneScoped(); // Tracy profiler marker to measure the duration of WaitForSingleObject

WaitForSingleObject(hTimer, INFINITE); // Wait for the next timer event to be signaled

LARGE_INTEGER dueTime;

// This is in 100s of ns, hence * 10. Negative value means a relative date!

dueTime.QuadPart = -(useconds > 0 ? (useconds * 10) : 1); // Make sure we have values <= -1

SetWaitableTimer(hTimer, &dueTime, 0, nullptr, nullptr, FALSE);

WaitForSingleObject(hTimer, INFINITE);

}

// ...

while (true) {

LARGE_INTEGER startQPC;

LARGE_INTEGER endQPC;

PERF_REGION("Iteration");

QueryPerformanceCounter(&startQPC);

DoSomeWork();

QueryPerformanceCounter(&endQPC);

int64_t durationUs = ((endQPC.QuadPart - startQPC.QuadPart) * 1000000 + /*round up*/(1000000 - 1)) / frequencyQPC.QuadPart;

AccurateSleep(1000 - durationUs);

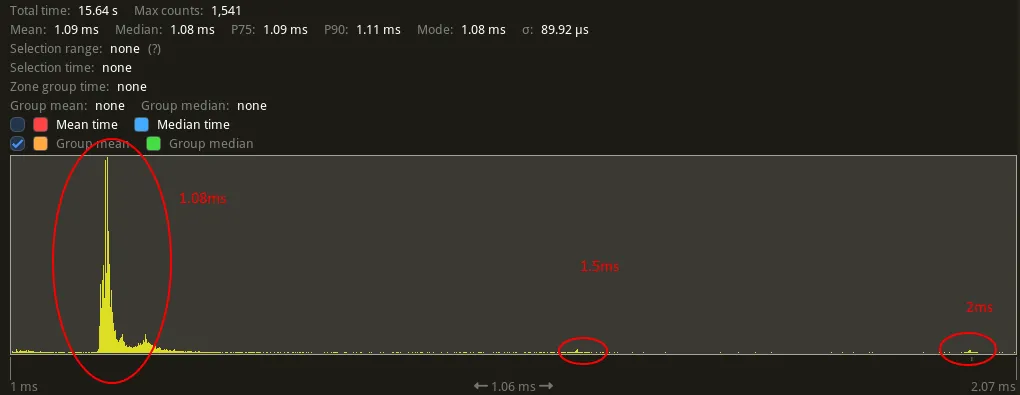

}The results are… surprising. The iteration time is now ~1.08ms, with a few spikes at 1.5ms and 2ms…

While a few of those are due to the DoSomeWork function being preempted, these timings are mostly explained by WaitForSingleObject now waiting precisely 1ms most of the time. It almost never waits the ~0.91ms that we typically put in lpDueTime.
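The two unit conversions used in the loop above are easy to get subtly wrong. Here they are isolated as portable helpers that can be unit-tested off Windows. The function names are hypothetical. The 10MHz QPC frequency used in the examples is an assumption; always read the real value with QueryPerformanceFrequency.

```cpp
#include <cassert>
#include <cstdint>

// Relative due time for SetWaitableTimer: units of 100ns, negative means "relative to now".
// Mirrors the clamping in AccurateSleep: anything <= 0us becomes the smallest relative value, -1.
inline int64_t relativeDueTime100ns(int64_t microseconds) {
    return -(microseconds > 0 ? microseconds * 10 : 1);
}

// Converts a QueryPerformanceCounter tick delta to microseconds, rounding up.
// Rounding a/b up means adding (b - 1) to the numerator, where b is the QPC frequency.
// ticks * 1000000 stays within int64_t for any interval shorter than a few days at 10MHz.
inline int64_t qpcTicksToMicrosRoundUp(int64_t ticks, int64_t frequency) {
    assert(ticks >= 0 && frequency > 0);
    return (ticks * 1000000 + (frequency - 1)) / frequency;
}
```

For example, relativeDueTime100ns(910) gives -9100 (910μs, relative to now). At 10MHz, 9101 ticks (910.1μs) round up to 911μs.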

Note: you can obtain waits of 0.5ms if you set lpDueTime to a value of 500μs or less.

Since we know that the timer is triggered on 0.5ms boundaries, we can probably afford to ask it to be fired earlier to avoid missing the deadline. This way we can avoid most of the 1.5ms and 2ms values by reducing the sleep duration by, let’s say, 80μs: AccurateSleep(1000 - durationUs - 80);
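A simplified model shows why a small margin can help. It assumes, for illustration only and not as documented kernel behavior, that the timer can only fire on a fixed grid of instants spaced 0.5ms apart. A wait then wakes on the first grid instant at or after its target. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Simplified model (an assumption, not the kernel's actual algorithm): the timer can only
// fire at instants phaseUs + k * periodUs. A wait targeting targetUs wakes at the first
// such instant that is at or after the target.
inline int64_t nextGridPoint(int64_t targetUs, int64_t periodUs, int64_t phaseUs) {
    assert(periodUs > 0);
    const int64_t offset = targetUs - phaseUs;
    // Ceiling division. For offset <= 0, C++ integer division truncates toward zero,
    // which is already the ceiling for non-positive quotients.
    const int64_t k = offset > 0 ? (offset + periodUs - 1) / periodUs : offset / periodUs;
    return phaseUs + k * periodUs;
}
```

Suppose an iteration starts on a grid instant at t=0:

- A target of 1000μs wakes at 1000μs.
- If a few μs of overhead push the target to 1005μs, the wake-up slips to 1500μs.
- Asking for 80μs less (a target of about 925μs) lands on 1000μs again.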

Results show that we now sleep for 1.5ms and 2ms less often, but it's still not good: the sleep mostly snaps to 1ms instead of respecting our ~0.9ms lpDueTime…

So compared with using the period parameter (red is period, yellow is due time), this isn't good; it's not doing what we want:

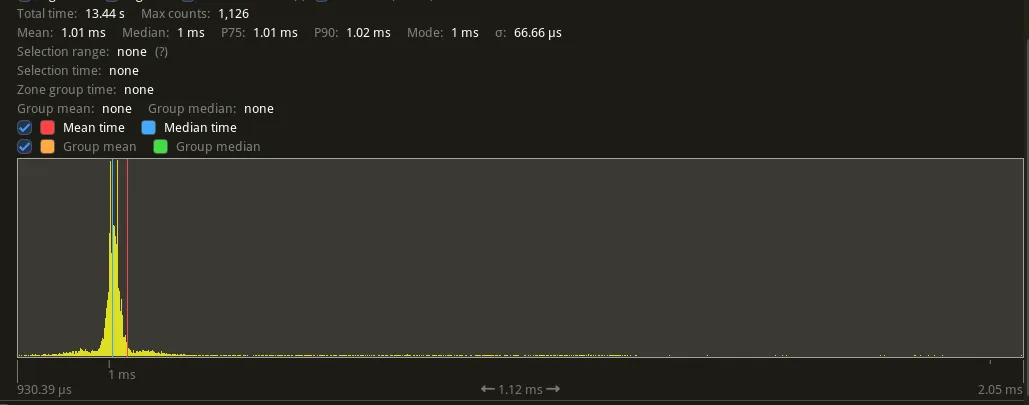

But what happens if we use timeBeginPeriod(1) again? (Or, another process calls it?)

Oh my god.

WaitForSingleObject seems to now be respecting our dueTime? Well… yes and no. What happens is that its duration now snaps to a periodic multiple of 0.5ms.

When there is no process with timeBeginPeriod(1), SetWaitableTimer with a due time will reset the phase of the timer, otherwise it seems to adopt the period and phase from the timeBeginPeriod(1) call! (But sometimes the phase still changes…)

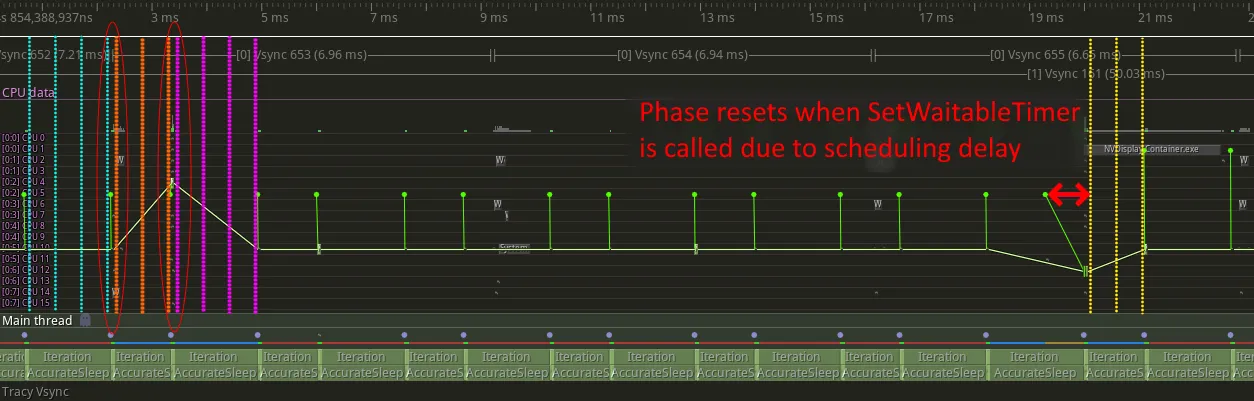

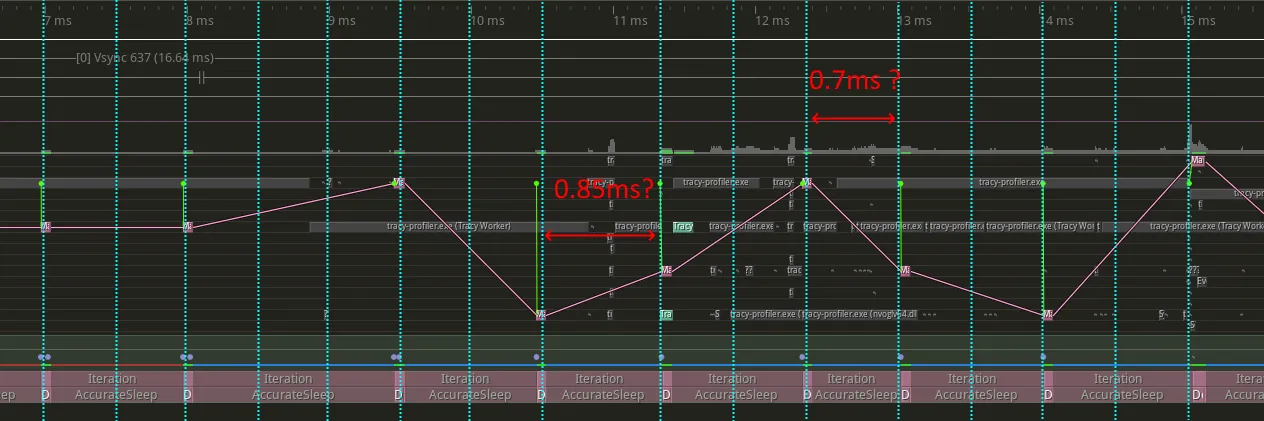

As a picture is worth a thousand words, here is a comparison using Tracy's CPU view. I recently submitted a visualization of thread wakeup events to Tracy, which happens to be pretty handy here!

- The green lines represent the thread wakeup event.

- The big green dot represents the point at which the kernel triggered the timer. This is what matters: the thread becomes ready to be scheduled but might still be delayed, which is a different problem.

- The vertical dotted lines were added to represent the timer periods I deduced from the trace.

Without any process using timeBeginPeriod(1) and dueTime of 0.55ms:

With a process using timeBeginPeriod(1) and dueTime of 0.55ms:

It is rather difficult to understand what is happening under the hood, and why sometimes the phase seems to change.

I made the following observations and guesses, but so far could not confirm that any single one is actually responsible for those issues:

- Cores are not perfectly synchronized: phase changes seem to occur more often when the thread moves to another core, but setting the affinity to a single core does not fix the issue entirely. It does, however, greatly reduce it.

- The kernel ends up processing interrupts: in some cases I observed a system process waking up exactly on time, while our thread wakeup was only triggered later.

- Some other process changes the period, which triggers a phase reset: I've observed phase shifts when NVidia's NVDisplay.Container.exe wakes up, and it is sometimes listed in the powercfg energy report as requesting 1ms periods.

- Timer coalescing: to reduce power consumption, Windows can coalesce timers. See Windows Timers Coalescing.

Under high load

All the previous results were obtained on a system with a relatively low load: most processes are idle and the CPU cores are free.

While such results are important, one must not forget to test under high load where contention might have an impact. To simulate high CPU usage, you can either use stress-ng with WSL or CpuStres from Sysinternals.

Setting a higher priority

First, let’s mention that you can (and probably should) change the thread priority if you need such a high timer resolution. There are multiple ways to do this:

- SetThreadPriority with THREAD_PRIORITY_HIGHEST. There is also THREAD_PRIORITY_TIME_CRITICAL, but you should avoid it unless you know you don't need any system resource. Otherwise you risk priority starvation, which is not a good thing.

- For audio applications, AvSetMmThreadCharacteristicsW(L"Pro Audio", &avTaskIndex) will put the thread in priority THREAD_PRIORITY_TIME_CRITICAL, and it may be recognized as higher priority than a normal thread.

- AvSetMmThreadPriority(hAvrt, AVRT_PRIORITY_CRITICAL), called after AvSetMmThreadCharacteristicsW, gives an even higher priority. I don't recommend this, as even audio frameworks such as JUCE stay on a lower priority.

Comparison

There isn’t much difference between SetThreadPriority(THREAD_PRIORITY_HIGHEST) and AvSetMmThreadPriority(AVRT_PRIORITY_CRITICAL).

This mostly reduces the number of outliers, which is what we want. But again, you need to make sure the OS threads will still be scheduled properly, or you may starve your system and make things worse. A good rule of thumb is to avoid any CRITICAL priority in userland.

As for the difference between using the period and due time, the results are confusing and problematic, as they are clear opposites depending on whether timeBeginPeriod(1) is in use.

If there is no process with timeBeginPeriod(1), then using a period is the best, almost all iterations are of 1ms. However if there is such a process, a lot of iterations will be of 1.5ms or even 2ms.

For the method with dueTime, it's the opposite… (Yes, I double-checked five times that I didn't invert the captures.)

What about NtSetTimerResolution?

You might have seen the undocumented NtSetTimerResolution mentioned somewhere, which lets you specify 0.5ms as a time period.

Well, that's not the whole story. When using waitable timers with a 1ms period, you do get more consistent timings under load. But unlike before, instead of values at 1ms, 1.5ms and 2ms, you get almost exclusively durations clustered around 1.5ms.

That said, it does let you use waitable timers with a due time <=0.5ms, which will indeed provide you with a 0.5ms timer! At the cost of using an undocumented (but seemingly stable) API.

Conclusion

Getting a high resolution timer on Windows (<=1ms) is still hard. Waitable timers behave very differently depending on CPU load and on whether some process has called timeBeginPeriod(1).

Today, the only way to get reliable results is to use SetWaitableTimer's due time together with a call to timeBeginPeriod(1). Otherwise, you're at the mercy of other processes.

As usual, when working this close to the kernel clock's timings, your best bet is to rely on it as little as you can. This usually means accepting some extra latency (buffering) or being able to adapt to missed events (delta time). For those who write rendering code, this is very similar to the issues you can have with VSync, except at a much higher frequency. You should use events provided by the OS where possible (DX12 / WASAPI / …).
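To adapt to missed events (delta time), one common pattern is a fixed-timestep accumulator. It is a generic game-loop technique, not code from this article. Instead of assuming every wake-up happened exactly one period later, measure the real elapsed time and run as many fixed steps as it covers. The class name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Fixed-timestep accumulator: a late wake-up (e.g. 1.5ms instead of 1ms) is absorbed
// by running an extra step later, so the simulation does not drift from wall-clock time.
class FixedStepAccumulator {
public:
    explicit FixedStepAccumulator(int64_t stepUs) : stepUs_(stepUs) { assert(stepUs > 0); }

    // Adds the measured elapsed time and returns how many fixed steps to run now.
    int64_t consume(int64_t elapsedUs) {
        assert(elapsedUs >= 0);
        accumulatorUs_ += elapsedUs;
        const int64_t steps = accumulatorUs_ / stepUs_;
        accumulatorUs_ -= steps * stepUs_; // keep the leftover for the next wake-up
        return steps;
    }

    int64_t remainderUs() const { return accumulatorUs_; }

private:
    int64_t stepUs_;
    int64_t accumulatorUs_ = 0;
};
```

With a 1ms step, a wake-up that arrives after 1.5ms runs one step and carries 0.5ms over. The next 1.5ms wake-up then runs two steps, leaving nothing in the accumulator.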

I still think Microsoft should do something about this, having opposite results depending on CPU load and what other processes do makes it impossible to rely on such timers.

💡 While I'd rather not advertise the usage of timeBeginPeriod(1)/NtSetTimerResolution, for those who really need it… (and I mean it, please think 10 times before doing this) you can still use a mixed approach with a busy-loop:

Sleep first to get as close to your target time point as the variance allows (0.5ms), then spin-loop.

The (dirty) code is available as a GitHub gist here.
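The gist itself is Windows-specific. Below is a portable sketch of the same sleep-then-spin idea; it is not the author's code. Here std::this_thread::sleep_until stands in for the high resolution waitable timer. Its precision depends on the platform and standard library, so spinMargin has to be tuned to the measured variance (about 0.5ms on the systems tested above). The function name is hypothetical.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

// Hybrid wait: sleep until `spinMargin` before the deadline, then busy-wait the rest.
// spinMargin should cover the sleep primitive's worst-case lateness.
inline void hybridSleepUntil(std::chrono::steady_clock::time_point target,
                             std::chrono::microseconds spinMargin) {
    using Clock = std::chrono::steady_clock;
    assert(spinMargin.count() >= 0);
    const auto coarseDeadline = target - spinMargin;
    if (Clock::now() < coarseDeadline) {
        // Coarse phase: may overshoot by the timer granularity, hence the margin.
        std::this_thread::sleep_until(coarseDeadline);
    }
    // Fine phase: spin on the monotonic clock until the exact deadline.
    while (Clock::now() < target) {
        /* spin */
    }
}
```

The trade-off is CPU time: each call busy-waits for up to spinMargin, so this only makes sense on a thread whose deadlines really matter.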